OpenAI Is Doing Ads While Anthropic Is Doing Unemployment

The Weekend Leverage, Jan 18th

Vindication. Sweet, sweet vindication. As a newsletter writer, much of my value comes from my ability to forecast the future. Thankfully this week proved I haven’t lost my edge. The news we’ll cover today—ads, new content slop, AI’s economic impact on jobs—is stuff my long-time readers saw coming.

This year started with a bang. An incredible amount of stuff is happening. I’m here to tell you what mattered and what you need to do about it.

But first, this edition is brought to you by returning sponsor Box.

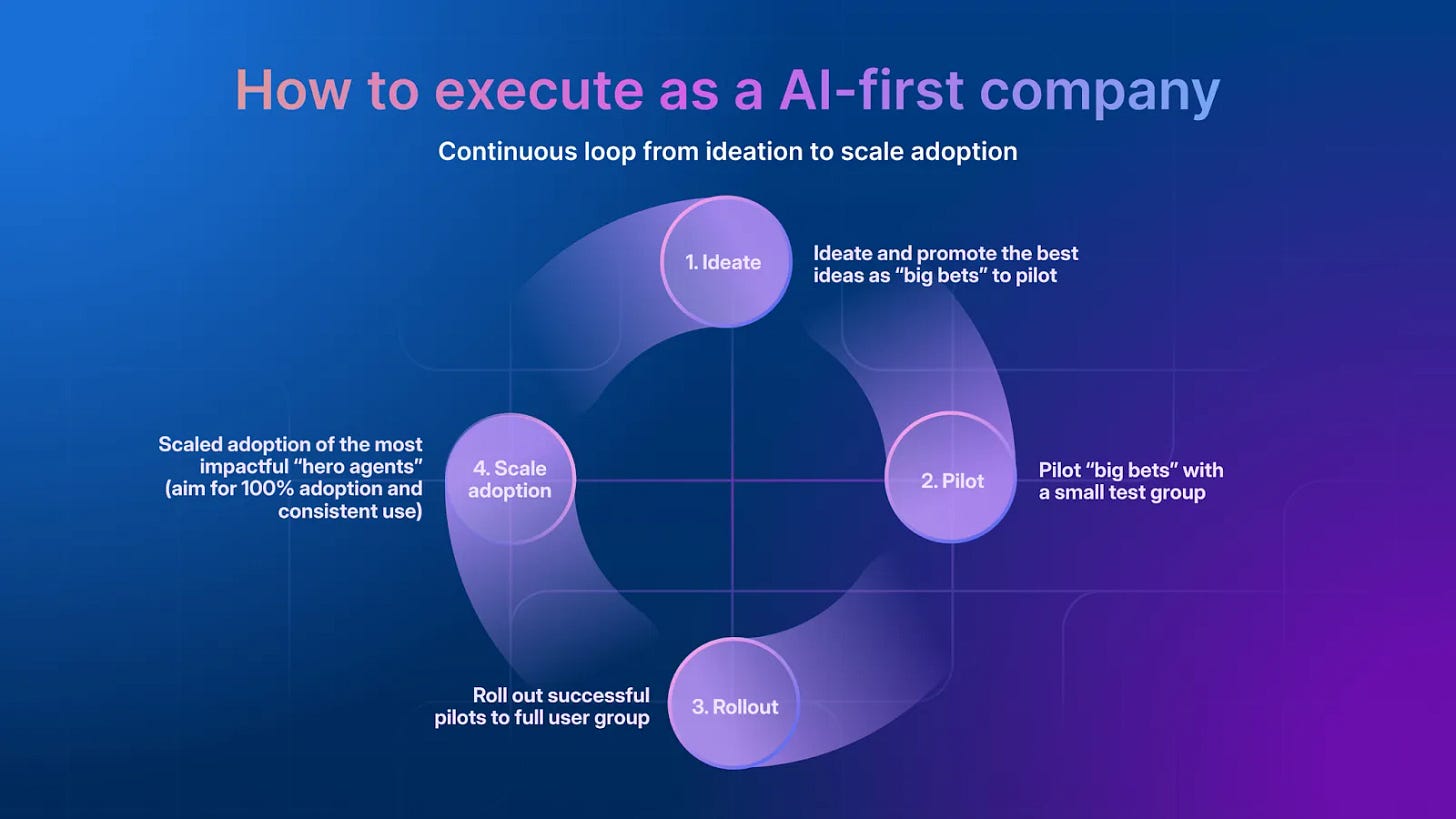

Box, the leading Intelligent Content Management company, is breaking down how it structures experiments, frameworks for AI principles, and more in its Executing AI-First series.

In it, you’ll discover how to turn a raw idea into a strategic AI powerhouse, and get a step-by-step playbook for empowering teams to thrive in the era of AI.

In this series, you’ll learn:

- How Box approached becoming AI-first through its value realization strategy
- How to deploy agents with an Ideate>Pilot>Rollout>Scale plan
- How to identify and empower AI managers
- How to measure what matters by tracking AI agent impact

Dive into Box’s Executing AI-First series and follow along for actionable insights and downloadable templates.

MY RESEARCH

Google wants to commoditize everyone. Everyone’s favorite search engine just released a technical standard that allows AI agents to easily navigate and purchase goods on the web. The strategy is a repeat of the idea that made them a nearly four-trillion-dollar company: make everyone but themselves nearly unprofitable. They want to commoditize their complements. This would seem like a wise move; after all, there is the whole four trillion thing. But I have some doubts about whether this paradigm will neatly translate to LLMs. (Bonus, results from my own experiments in AI and ads!) Read it here.

2026 is the year AI becomes real. The last few years have been about partially fulfilled potential. Yes, ChatGPT is insanely popular, but revenue and GDP growth from AI have disappointed relative to investment. This is the year all that changes. AI is going to dramatically reshape our politics, our businesses, and how we perform knowledge work. This is a video essay that I put together on the topic. Please like and subscribe on YouTube—it makes a big difference for the channel. Watch here.

WHAT MATTERED THIS WEEK?

BIG TECH

OpenAI embraces the ad monster. On Friday, Altman & Co. announced they’d launch ads in the coming weeks within ChatGPT for free-tier subscribers. This had to happen. As always, free-to-paid conversion in consumer apps hovers below 10%, so the tens of billions they were leaving on the table by not running ads were simply too tempting to ignore.

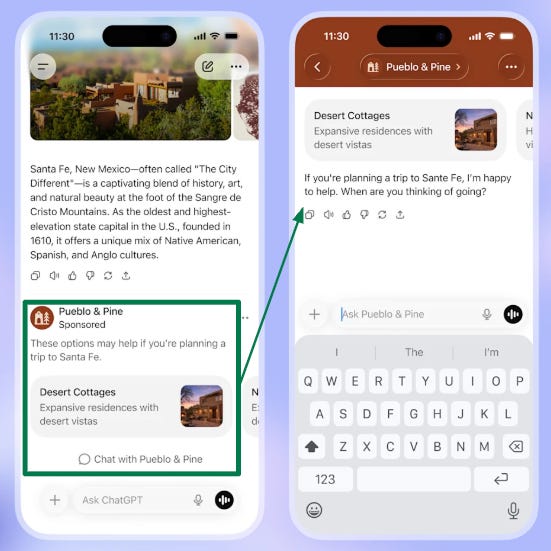

The interesting part is the formats they’re promoting. Look at the screenshot below:

The first thing you’ll note is that about a third of a result is an ad! That is a lot of real estate. They also have the typical CTA button to visit the advertiser’s website, but there’s something novel: ‘Chat with [INSERT ADVERTISER].’ Here, you can click to open a nested chat where the screen indicates you’re talking with whoever sponsored that query.

This raises a thorny question. OpenAI’s ads principles say, “Ads do not influence the answers ChatGPT gives you. Answers are optimized based on what’s most helpful to you. Ads are always separate and clearly labeled.” But is it still considered a ChatGPT answer when the advertiser is answering the questions? After all, it is within the ChatGPT app, running on OpenAI models.

This question matters because a really helpful LLM will clearly list tradeoffs of a purchasing decision. Almost to the advertiser’s detriment! In my own experiments, I had users churn because the LLM convinced them that The Leverage was not a good fit for their needs.

¯\_(ツ)_/¯

Beyond that, will the system prompt (the document steering the LLM’s personality and purpose) be changeable for advertisers? As a business owner, I certainly hope it will. LLMs are not particularly effective at sales. They are great at analysis! They are great at recommendations! But they are terrible at pitching. If I’m paying for customers to chat with my brand, I want to know the bot is pitching only my product and communicating the way I want. Which, by default, will decrease people’s trust in ChatGPT because it alters the incentives and personality of the bot.

Ads are an absolute necessity for AI, but the details are still unclear. Good thing this publication has been researching the topic since day one! You can read my framework for thinking about AI ads, and you can listen to a podcast here where the most successful AI ad company reveals how they built a multimillion-dollar business in just a few weeks.

THE SLOPPENING

A new brain-liquefying media format is starting to grow in the West. Microdramas are short 1-to-3 minute stories that are kinda like if soap operas and YouTube Shorts had a baby. Each episode starts with an eye-grabbing visual, something like a pot breaking, or bright flashes of color. Then the worst actors you have ever seen in your life start to flirt with each other.

In China, over 690 million people watch microdramas. The venture ecosystem has started funding Western competitors. Holywater, creator of the microdrama app ‘My Drama,’ raised $22 million this week. Finally, TikTok launched a microdrama app called “PineDrama” in the U.S. this week. It’s already popular. Their top series “The Officer Fell For Me” has 193 million views, while the second most popular “Remarried at 50, My Husband Turns Out To Be A Billionaire” has 93.6 million views. Again, this app has been out for like a week.

For the sake of this newsletter, I downloaded all of these apps and subjected myself to a few hours of watching this stuff so you wouldn’t have to. I would describe my experience using the following image from SpongeBob:

These microdrama apps are the natural end state of the sloppening. This is content algorithmically designed to do one thing: engage your lizard brain. The quality bar is so low that I think someone could use AI video models to make all of this stuff and the audience wouldn’t notice.

I recognize that there have been moral panics about screens ever since the television was invented, but come on, if this doesn’t raise concern, what will?

AI RESEARCH

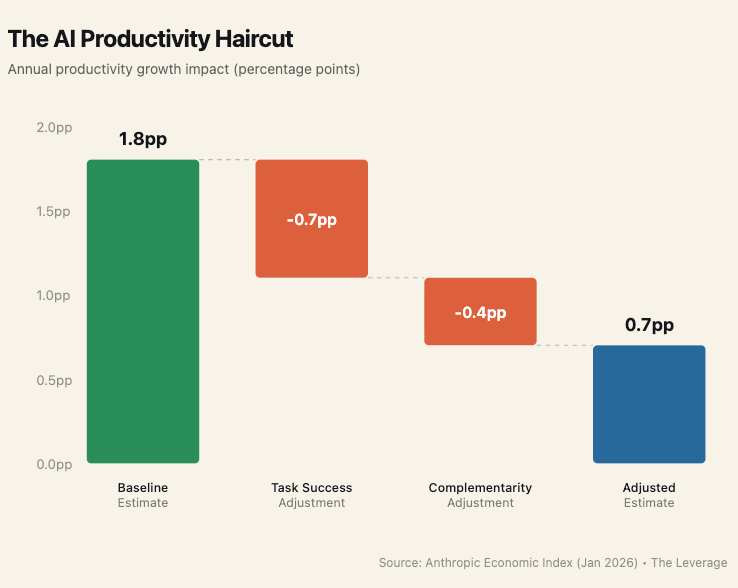

Anthropic’s new AI productivity data confirms the estimates are overstated—and they’re the ones saying it.

Anthropic analyzed over a million conversations for their fourth Economic Index report, released this week. The headline number you’ll see in the NYT is that “AI could add 1.8 percentage points to annual productivity growth.” But unlike most AI research, Anthropic didn’t stop there. They also tested what happens when you model tasks as complements rather than substitutes. The result: that 1.8pp drops to 0.7–0.9pp. Adjust for task success rates and you’re at 0.6pp.

Reading it, I kept thinking about a January NBER paper from Joshua Gans and Avi Goldfarb called “O-Ring Automation,” which argues that standard AI exposure estimates are “mathematically inconsistent” with how work actually functions. Jobs are not a bundle of tasks added together—they’re multiplicative. When you add a bottleneck, it constrains everything else. Anthropic’s data is the first large-scale empirical confirmation of Gans-Goldfarb’s idea. Which means that every McKinsey or Goldman report you’ve seen over the last few years is a dramatic overestimation of AI’s impact on productivity.
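To make the additive-versus-multiplicative distinction concrete, here is a toy sketch in Python. It is an illustration only, not the model from the Gans-Goldfarb paper or from Anthropic’s report: the task quality scores are invented, and the function names `additive_output` and `multiplicative_output` are hypothetical.

```python
from math import prod

def additive_output(qualities):
    # Additive view: a job is a bundle of independent tasks,
    # so score it by the average task quality.
    return sum(qualities) / len(qualities)

def multiplicative_output(qualities):
    # Multiplicative (O-ring style) view: every task has to go right,
    # so the task qualities are multiplied together.
    return prod(qualities)

# Hypothetical job: AI handles three tasks perfectly (1.0), while a fourth
# task remains a bottleneck at 50% quality. All numbers are invented.
job = [1.0, 1.0, 1.0, 0.5]

print(f"additive: {additive_output(job):.3f}")
print(f"multiplicative: {multiplicative_output(job):.3f}")
```

With these made-up numbers the additive score is 0.875 and the multiplicative score is 0.5. When every quality sits between 0 and 1, the product can never exceed the weakest task, which is the sense in which a bottleneck constrains everything else.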

Equally interesting is where those bottlenecks are. Anthropic found that Claude disproportionately handles tasks requiring higher education. So travel agents may lose complex itinerary planning, but they still need to be at the copier grabbing tickets. The net effect across most occupations is deskilling and lower wages. However, Gans-Goldfarb predict the opposite outcome for wages when the remaining tasks are genuine skill bottlenecks. Real estate managers lose bookkeeping and CRM management but keep contract negotiation and sales, tasks that get more valuable when you can focus entirely on them. (This is why ATMs created “super-negotiator” bank tellers instead of unemployment lines, as I explained in my previous video.)

There are two things I’m doing differently as a result of this analysis. First, I’m now applying an automatic 50% discount on productivity projections from AI unless they clearly control for the bottleneck problem. Second, I’m performing an AI audit for my own job with each new model release. Is there a bottleneck that was just removed? Is the trajectory of improvement going to leave me with something economically valuable to do? If the remainder is commodity work, you have a problem. If it’s a genuine bottleneck—judgment, negotiation, taste—you’re about to become more valuable.
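For a rough sanity check on that 50% rule of thumb, the sketch below compares it with the haircuts implied by the report’s own numbers quoted earlier (1.8pp headline, 0.7–0.9pp under complements, 0.6pp after task success rates). The labels and the `implied_discount` helper are hypothetical names chosen for this example.

```python
HEADLINE_PP = 1.8  # headline estimate, percentage points of annual productivity growth

# Adjusted figures quoted earlier in this section.
adjusted_pp = {
    "complements, high end": 0.9,
    "complements, low end": 0.7,
    "after task success rates": 0.6,
}

def implied_discount(headline, adjusted):
    # Fraction of the headline estimate that disappears after the adjustment.
    return 1 - adjusted / headline

discounts = {
    label: implied_discount(HEADLINE_PP, pp) for label, pp in adjusted_pp.items()
}

for label, discount in discounts.items():
    print(f"{label}: {discount:.0%} haircut")

# The rule of thumb: halve any projection that ignores bottlenecks.
rule_of_thumb_pp = HEADLINE_PP * 0.5
print(f"50% rule applied to the headline: {rule_of_thumb_pp:.1f}pp")
```

On these figures the haircut runs from 50% to roughly 67%, so a flat 50% discount sits at the gentle end of what the report’s own adjustments imply.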

TASTEMAKER

AN ALBUM THAT RIPS

I’ve been listening to Bleeds by Wednesday all week. The album is worth discussing for its extreme contrasts. The genres mashed together here are wildly ambitious. They are using pedal steel guitars most popular in bands like Lynyrd Skynyrd or The Allman Brothers Band. That is somehow blended with noisy, aggressive rock to make something as catchy as a pop song from Carly Rae Jepsen. How do they do that?? That alone would make the album special, but the lead singer Karly Hartzman delivers her own inventive lyrics with a simple, pure voice. Most artists today would say something like “Baby, why did you fly away?” Instead, Hartzman will write something like “I drove you to the airport with the E-brake on.” I missed this when it came out in September, but I’m retroactively adding it to my best albums of 2025 list. Check out Elderberry Wine if you want to sample their sound.

ONE LAST THING

I’ve written a number of essays I’ve been too nervous to publish. Some are spicy but adjacent—Trump’s effects on tech markets, or the argument that founders often need to be mildly sociopathic to win.

But I also have a separate pile of essays that share the anima of this publication but sit outside what I explicitly promised when you subscribed—book reviews of stuff like Atlas Shrugged or Byung-Chul Han’s Non-things, reflections on Mormonism and its relationship to tech, and essays on the institutionalization of miracles. They feel spiritually aligned, even if they’re not strictly on-premise to The Leverage.

So the question is whether you’d be interested in me occasionally going a little broader here. I genuinely don’t know whether The Leverage can sustain both deep technical research and work that’s more philosophical, introspective, or cultural, but I’m interested in finding out. Let me know!

Have a great week,

Evan

Sponsorships

We are now accepting sponsors for Q1 ’26. If you are interested in reaching my audience of 34K+ founders, investors, and senior tech executives, send me an email at team@gettheleverage.com.
