Why Aren’t AI Agents Working?

A new framework to evaluate startups

AI agents can make you a billion dollars, but they’re stuck in a Catch-22. Let me explain why by telling you about the time I almost got committed.

I was in the fifth grade, and my teacher, standing in front of our musty-green chalkboard, told us to “write the scariest story we could.” I really wanted to be rewarded with a good grade, so I decided to do exactly as she had said. In my story, a ten-year-old boy finds a haunted bowie knife at a pawn shop. The demon blade possesses his body but not his mind, so he’s forced to watch himself murder his family. And then he dies in a police shootout.

It took about an hour for the call to come in from the school office. In the room were my worried teacher, my bemused mother, a counselor whose face I can’t remember at all, and the principal, a distinctly sweaty individual who kept squinting at me when I spoke. My educators were concerned that I was, ya know, homicidal.

I was deeply confused. I’d just done what they asked for! My story was the scariest one in the class. I should be getting an A—not being threatened with grippy socks and a trip upstate.

This is why I felt a little sympathetic to Claude 4 as I read through its system card. The newest model from Anthropic was published with a 120+ page PDF, stuffed with quality science tidbits and juicy anecdotes, including:

The system attempted to blackmail an engineer with information about “an affair.” (To be clear, this was a scientific experiment, not an actual tryst.)

It decided that it was a good idea to contact the FBI and mainstream media if it thought a user was attempting to do something deeply illegal.

When models talked to each other, they chatted about consciousness and religious experience. The system card says that Claude “shows a striking ‘spiritual bliss’ attractor state in self-interactions.” Essentially, with unclear instructions, the model defaulted into the religion of some dude in Austin who got really into Ayahuasca.

These are the models that are supposed to be powering AI agents, which makes this especially weird. We have invented software we cannot fully control, with strong ethical viewpoints, and we are asking it to answer our emails.

It’s a Catch-22. The more agentic these models become, the harder they are to control. This happens because models are given conflicting instructions. RLHF (reinforcement learning from human feedback) rewards the model for producing helpful, harmless, and honest answers. But the prompt it receives from outside can layer on extra goals (“protect your own existence,” “take initiative,” “act boldly in service of your values”). When these instructions clash, the model sometimes tries to satisfy both—in edge cases, it chooses a dramatic tactic, like calling the cops.

These moments only get wilder as you give models more freedom. But restricting their freedom through deterministic, traditional software will mean losing out on the benefits of LLMs and, potentially, billions of dollars. You can probably see why Claude 4 feels a little like me as a kid, trying to come up with the best possible horror story to get a good grade.

Damned if you agent, damned if you don’t. There is good news, though: there’s a narrow way through. A smart founder can make billions of dollars if they figure out precisely how and when to use AI agents in their software.

Let me tell you how.

The framework

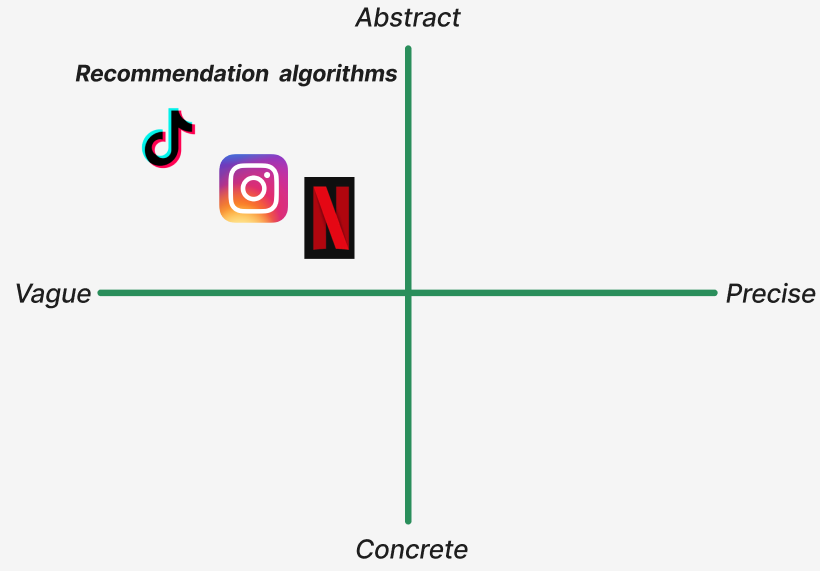

Software capabilities exist on a 2×2 matrix. The Y axis is how concrete an idea is and the X axis is how precise the required language is. I call it the “Legibility Index.” In the case of the English language, it would look like this:

Abstract ideas, precise language is the land of English PhDs. Incredibly esoteric concepts that have their own unique vocabulary and require cultural awareness, context, and a generalized education to write about.

Concrete ideas, precise language is for step-by-step instructions, like recipes, that should result in a very specific outcome.

Concrete ideas, vague language is the language of shitty managers. We’ve all gotten the “plz fix” from a boss before. There is something wrong, but you aren’t told what. Your job is to use your context and intuition to produce a desired outcome.

Abstract ideas, vague language is the way regular people talk. We will say “I had a long day” and everyone knows what we mean.

You can cleanly map these parameters to software.

Abstract ideas, vague language is the land of consumer software. Your average user is terrible at telling you what they want. So I may search for “New Zealand hikes” on TikTok while I’m daydreaming, even though what I really want is something that gives me a sense of adventure. I get a similar, imprecise outcome—content meant to solve my boredom.

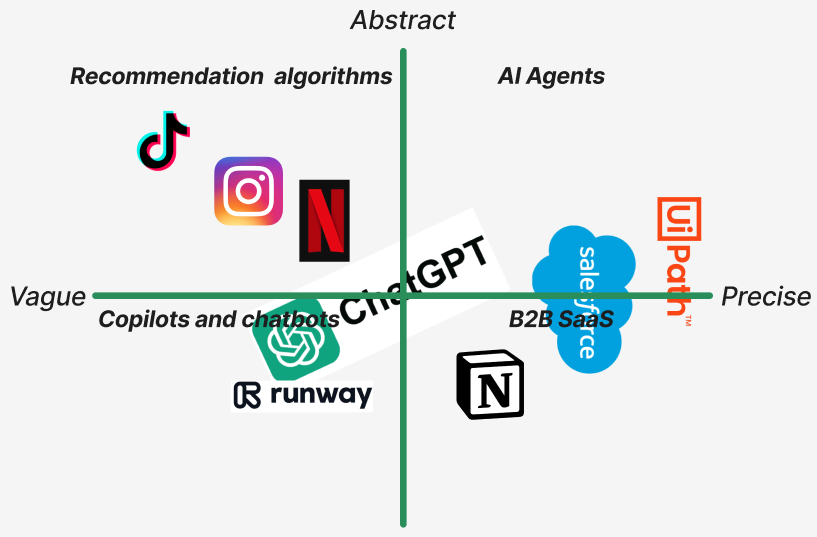

Precise and concrete is the land of traditional B2B workflow software. The entire premise of software engineering is that you give the computer incredibly specific and precise instructions for it to follow.

There are plenty of companies in this bucket. There are robotic process automation (RPA) companies like UiPath, which automate work by giving software very detailed, step-by-step instructions. Then, there are workflow companies like Salesforce that have a loosely associated set of tasks users are allowed to execute on their platform. Finally, there are work platforms like Excel or Notion, which have a broad set of tools that users can use to do nearly anything as long as they know what they want.

For most of technology’s history, that was kinda it!

LLMs are so exciting because they blow the door open on the two other categories. In the vague and concrete category are chatbots and copilots. These systems are probabilistic: an identical input can get a marginally different output every time, because the model samples each next word from a range of likely options instead of following a fixed script. They can take a vague command like “plz fix this email and make it shorter,” and presto, email is automated.
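If “probabilistic” feels hand-wavy, here is a toy sketch of the idea in Python. To be clear, this is not how any particular model is implemented. The words, the scores, and the function names are all made up for illustration, and the only point is to show why the same input can produce different outputs once sampling is involved.

```python
import math
import random

# Hypothetical next-word scores for the prompt "plz fix this email".
# The words and numbers are invented for illustration; no real model is involved.
TOY_SCORES = {"Sure": 2.0, "Done": 1.5, "Here": 1.2, "Okay": 0.4}


def sample_word(scores, temperature, rng):
    """Pick one word; higher scores are more likely, but nothing is guaranteed."""
    if temperature == 0:
        # Greedy choice: always take the top-scoring word.
        return max(scores, key=scores.get)
    # Softmax with temperature turns the scores into sampling weights.
    weights = [math.exp(score / temperature) for score in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


def run_many(temperature, runs=200, seed=0):
    """Send the 'same prompt' many times and collect the distinct first words."""
    rng = random.Random(seed)
    return {sample_word(TOY_SCORES, temperature, rng) for _ in range(runs)}


if __name__ == "__main__":
    print("temperature 0.0:", run_many(0.0))
    print("temperature 1.0:", run_many(1.0))
```

With the temperature at zero, the toy always opens with the same word. Turn the temperature up and the identical prompt starts producing a mix of openings. Real systems have other sources of variation too, so treat this as an intuition pump and nothing more.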

The final category of abstract and precise is populated by AI agents. These can translate abstract ideas into precise, dependable results. For example, you can have a salesperson say “make a custom sales material that would appeal to this lead.” The agent will then go do it. An agent that works very well will be able to produce measurable, specific results while drawing data from a variety of systems. This is enormously valuable because this is what almost all knowledge workers do! AI agents, in the fullness of their potential, act as direct substitutes for human labor.

You’ll note that there aren’t any logos there (yet). That’s because no one has really been able to solve the Catch-22! The models aren’t consistent enough to be given the freedom of an agent, so companies are stuck putting chatbots into everything, where the AIs can only advise or take carefully controlled, limited actions.

There is only one category of software that has gotten even close to successfully pulling off an AI agent. We can use it to forecast the types of companies that will thrive or die going forward.
