Why Sam Altman Has to Let ChatGPT Get Horny

The Weekend Leverage, October 19th

The entire staff of The Leverage (aka me) has learned a hard lesson this week. If the food tastes weird, and has been in the fridge for too long, order DoorDash. As such, we were lighter on the publication volume than I would like, but we saw multiple cases where this publication’s archive proved prescient. As always, your subscription allows you to know the future first.

This edition we’ll cover:

Why OpenAI is allowing erotica but not racism on its apps

The unit economics of kitchen robots

How a company whose founder is accused of espionage was able to raise $300 million

Two spookily prescient TV shows for our time.

But first, this week’s edition is brought to you by Nat Eliason’s Build Your Own Apps With AI.

Turns out I can build apps now. Wild.

I know Nat personally, and when he launched an early version of this course on effective vibe coding, I figured I’d give it a shot. Zero expectations. I’m not a developer—never have been.

A few hours later, I had a working app. Not a prototype. Not a broken mess. An actual functioning app that solved a real problem in my workflow.

Here’s the thing: with Claude and ChatGPT doing the grunt work, building software is suddenly... manageable? Fun, even? Nat strips away all the confusing parts and shows you how to get stuff done, and how to get through the kinds of issues that often cause people to quit. You also get $500 worth of premium software tools bundled in, including Cursor Pro.

If you’ve been sitting on app ideas or want to automate parts of your work, the barrier has never been lower. This is the moment.

MY RESEARCH

Great companies are grown. The spreadsheet promised me answers. Twelve months as a father, six months as a founder, and I’ve spent most of that time trying to optimize my way to happiness—copying the playbooks of people who won at life and convinced me I could too. It doesn’t work. You can’t process-engineer greatness into existence, whether it’s a company or a life worth living. The messy, frustrating, occasionally magical truth is simpler: the only path that works is the one that looks like you.

WHAT MATTERED THIS WEEK?

PUBLICS

Apple just doesn’t believe in the Vision Pro. The company released a new edition of the VR headset this week with the same price, a new headband, and the M5 chip. This lack of any real innovation is a crushing disappointment and a real indictment of the leadership team. I used the previous generation of the Vision Pro pretty extensively—it is by far the best way to consume media when you are by yourself. Watching Mad Max: Fury Road on it was one of my favorite cinematic experiences ever. Watching sports highlights on it is otherworldly. That said, the company has resolutely refused to commit to making this thing great. There has been no VR streaming of sports. No original Apple TV shows for Vision Pro users. No killer games they’ve developed. There is zero, zilch, nada.

Couple this limp release with the news that Apple is diverting resources away from the Vision Pro toward their Meta Ray-Ban competitors, and this waffling is baffling. They seem to have overlearned the lesson of the iPhone. Yes, third-party developers made it great, but when it first launched, it did so with great first-party apps. I wish they had put aside a few billion dollars and really just gone for it. The technology was/is special, but the content ecosystem is so lacking that I can’t recommend this purchase to anyone. Perhaps the strategy is to wait until the M series chips get advanced enough that they can cut down on the weight of the device before investing in content? But if that is the plan, why release this thing in the first place? It is baffling product management. Couple this with their horrendous efforts in AI, and this is a company exhibiting no guts, no risk appetite, and no real desire for glory.

AI LABS

No racism on my porn app. This week, videos of Martin Luther King Jr. fighting Malcolm X with monkey noises playing in the background went viral. In response, OpenAI stopped Sora from generating videos of MLK. Simultaneously, Sam Altman announced that ChatGPT would soon be able to generate erotica for its users. At first glance, these policy actions seem to be in contradiction. Both are examples of users using AI to generate content that some people would find politically or morally objectionable. The simple difference is that Sora is a distribution network, and OpenAI doesn’t want to promote racist garbage. That is all well and good.

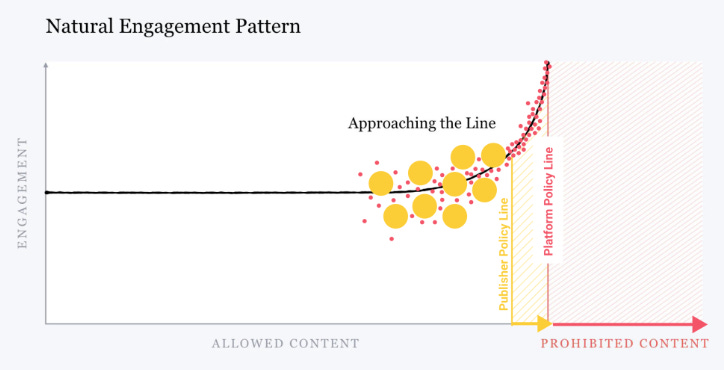

However, both of these products are in the attention business, and in the attention business, the ultimate antagonist is human nature. If Sam Altman had been subscribed to me sometime over the past few years, he would’ve known this would happen. In 2021, I proposed a new framework for internet competition called “Double Bind Theory.” Research at Meta showed that, “no matter where we draw the lines for what is allowed, as a piece of content gets close to that line, people will engage with it more on average—even when they tell us afterwards they don’t like the content.”

This creates a double bind. The website with the most permissive content policies will aggregate the most attention, but in so doing, will attract negative social feedback loops. Those negative feedback loops mean that large media companies will struggle to remain edgy because professional publishers can’t take that risk. Meanwhile, individual creators will happily gamble their livelihoods on hot takes, despite always being one cancellation away from financial ruin. It’s part of the reason Joe Rogan beat every major media company in podcasting. He gets more attention because he platforms more “unacceptable,” or edge case stuff.

In the 2010s, those negative social feedback loops were strong enough that content moderation policies on all platforms had relatively clear boundaries. Today, with the rise of X, and a general swing back toward conservative and populist tendencies, those policies are less restrictive. Bundle that with how AI drops content creation costs to zero, and you have degenerate content liftoff. Everything edgy becomes commonplace. It’s a race to the bottom.

That means companies have to examine their product suite to see how close to the mucky bottom they are comfortable being. Because Sora has an algorithmic recommendation feed, they have to have more content boundaries in place. ChatGPT, however, does not have that! So, OpenAI and Grok and everyone else are incentivized to make their AI bots as horny and sycophantic as is strategic.

You may not like this. I certainly don’t! But ultimately the battle you are fighting here is against humanity’s nature. To really fix this issue, you have to elevate the human condition first.

LAUNCHES

AI coding, brought to you by Blue Apron? In July I shared these sentences: “Why shouldn’t my coding chatbot be sponsored by AWS? If a developer is working on integrating payments, Stripe should have an AI agent they can sponsor to do the coding for you.” This idea received multiple responses that I can only summarize as, “Evan, u dumb.” It seemed very natural, to me, that the high cost of running coding agents would be subsidized by ads. Well, behold, that exact product launched this week. Amp Free is a coding agent that helps developers produce more code, and it does so for free.

Developers are an immensely valuable, notoriously difficult audience to contact so there will be appetite for ads. Plus, developers also have to consent to their code being used for training data for AI models, which could end up being even more valuable than the ads themselves. Amp already has a bunch of users, so it has the demand side of the ad market taken care of, and as such, it looks like the supply side of ad dollars came together with 10+ quality sponsors signing up to start.

Where this gets really interesting is what an ad-sponsored AI agent workflow looks like. Will database providers bid against each other to be the automatic backend on app builders? (Yes.) Will stablecoin providers bid against each other to have their APIs suggested when a developer is building a payments app? (Again, yes, of course they will.) Typical ads are targeted on the basis of user demographics; workflow ads can be targeted on the basis of workflow characteristics. That is something wholly different and unseen in ad markets before.

The dirty secret of enterprise marketing is that most social ads simply don’t work. You have to get in customers’ faces with brand building events, in newsletters they trust, or some alternative angle. Integrating ads directly into workflows will save users thousands of dollars in costs and make growth easier for companies. Win, win.

If you are building ad-supported AI companies, please reach out! I would love to talk to you and feature you.

DEAL VIBES

Growth papers over all sins. In my time in Silicon Valley, I saw many companies cover for founders and executives in cases of sexual harassment, drug abuse, and a list of ethical violations so long that it makes my toe hair curl. The reasons these incidents don’t get publicized are twofold:

Power laws: There are very, very few companies that realize billion dollar exits. No matter how satisfying the moral high ground feels, it doesn’t pay the bills. VCs who publicly go against someone lose the opportunity to invest in that person, their ideas, and their new companies in the future.

Legal liabilities: Because a startup is a Gordian knot, weaving together founders’ personal and professional lives, mistakes made by senior executives could destroy a company. It is often cheaper for the company, and better for employees, if these ills are never brought to light, lest they destroy the organization entirely.

Anyway, a company accused of RICO violations, espionage, and security fraud, whose CEO fled Europe for Dubai in fear of legal reprisals, raised $300 million at a $17.6 billion valuation this week. Deel does payroll and human resources software. The company does north of $1.2 billion in revenue a year and has a “70 percent growth rate.” Even wilder, growth accelerated after the lawsuits were filed. This is a financial profile so righteous that of course people wanted to invest—espionage sins be damned.

To steelman this: Every company of sufficient size will be subject to lawsuits. They are just too big not to be. And if you are a venture investor, your job is to deliver returns first and foremost. The legal risk for Deel is just downward pressure on the price of the company, and investors probably determined that it was relatively minor. I can understand how investors get here, but pricing in legal risk is not the same as doing the right thing.

$12 million for a robot dishwasher. The company named “Armstrong,” not to be confused with Evan Armstrong, the sexy, yet kind-hearted author of this newsletter, raised $12 million to build robots focused on kitchen tasks. Right now, they just do dishwashing.

In many ways, this is an ironic thing to use robot arms for. We already have robots that do the dishes. They’re called dishwashers! Instead, it looks like these arms load the plates into dishwashing trays and then unload them. It’s a slight but important expansion of the task. It’s why we have to be so careful when we assume a “task” is automated. The boundaries of workflows are porous, and automation of one component simply moves the human labor bottleneck further up or down the labor line.

More broadly, the company is emblematic of the hard tradeoffs for robots. Take monetization: The company sells a subscription to the robot, maintenance included, for “$1.5k - $3k/month, depending on size of restaurant.” The company then brags that “30-40 hours/week of labor” are saved by restaurants. Average wage for a dishwasher in the U.S. is about $15 an hour, so at roughly four weeks a month the company is offering about $1,800-2,400 a month in labor replacement savings. So as a restaurant you are saving, at most, a few hundred bucks depending on layout, which is why the company talks about saving money on water usage in the sales pitch. You don’t bother talking about ancillary benefits if the robot’s primary cost savings are all that great. Ultimately the company isn’t selling the automation of dishes, it is selling the automation of managing dishwashing employees. It is very tough to find a dishwasher because, well, the job sucks. No one wants it! Restaurants are paying for the ability to not have to worry about staffing; cost savings are just a bonus. In that regard, the bet for this company is on high employment rates and a reduction in immigration.
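For anyone who wants to poke at the restaurant-side napkin math, here is a minimal sketch in Python. The wage, hours, and subscription prices are the figures quoted above. Two things are my assumptions rather than anything the company has said: a month is treated as four weeks (which is what produces the $1,800-2,400 range), and the cheaper subscription is paired with the smaller restaurant that saves fewer hours.

```python
# Napkin math for the restaurant's side of the deal.
# Figures come from the paragraph above; the pairing of price to hours
# and the four-week month are illustrative assumptions.

HOURLY_WAGE = 15.0      # approximate U.S. dishwasher wage, dollars per hour
WEEKS_PER_MONTH = 4     # assumption: simple four-week month


def monthly_labor_savings(hours_per_week, wage=HOURLY_WAGE, weeks=WEEKS_PER_MONTH):
    """Dollars of dishwasher labor replaced per month."""
    return hours_per_week * wage * weeks


def net_monthly_savings(hours_per_week, subscription):
    """Labor replaced minus the robot subscription, per month."""
    return monthly_labor_savings(hours_per_week) - subscription


# Assumed pairing: small restaurant = 30 hours saved at $1,500/month,
# large restaurant = 40 hours saved at $3,000/month.
small = net_monthly_savings(hours_per_week=30, subscription=1_500)
large = net_monthly_savings(hours_per_week=40, subscription=3_000)

print(f"Small restaurant nets ${small:,.0f} per month")
print(f"Large restaurant nets ${large:,.0f} per month")
```

Under those assumptions the small restaurant comes out a few hundred dollars ahead and the large one is paying a premium over the labor it replaces, which fits the read that the real product is not having to staff the dish pit.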

For the company itself, the subscription model means they will always and forever be cash-constrained. Closely watching their videos, I think they are using the X Arm 7, which retails for $10,499. Throw in a few grand of custom grippers and sensors, and it feels reasonable to say that you are looking at $14,000 an arm. Each Armstrong station takes 3 arms, so you are looking at $42,000 per station. Let’s give ourselves a generous, “Evan is a dum dum” discount and say Armstrong can get their costs all the way down to $30,000. At their max subscription price of $3,000 a month, you are at a ten-month payback period! Add in sales costs, maintenance, and R&D, and suddenly you are looking at not being cash flow positive for over a year. Brutal.
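The company-side payback math can be sketched the same way. The per-arm cost, arm count, discounted station cost, and subscription prices are the estimates from the paragraph above; they are my guesses from watching videos, not disclosed numbers. The calculation covers hardware only and ignores sales, maintenance, and R&D.

```python
# Hardware-only payback period for one station, using the estimates above.
# All inputs are napkin-math guesses, not figures disclosed by the company.

COST_PER_ARM = 14_000             # estimated arm plus custom grippers and sensors
ARMS_PER_STATION = 3
DISCOUNTED_STATION_COST = 30_000  # the generous "Evan is a dum dum" discount


def station_cost(cost_per_arm=COST_PER_ARM, arms=ARMS_PER_STATION):
    """Estimated hardware cost of one station."""
    return cost_per_arm * arms


def payback_months(hardware_cost, monthly_subscription):
    """Months of subscription revenue needed to recoup the hardware."""
    return hardware_cost / monthly_subscription


scenarios = {
    "estimated cost": station_cost(),
    "discounted cost": DISCOUNTED_STATION_COST,
}

for label, cost in scenarios.items():
    for price in (1_500, 3_000):
        months = payback_months(cost, price)
        print(f"{label} (${cost:,}) at ${price:,}/month: {months:.0f} months")
```

Even the friendliest combination, the discounted hardware at the top subscription price, takes ten months to pay back before any other costs are counted. At the bottom of the price range the same hardware takes twice as long.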

You put both of these little napkin math exercises together and you get a sense for how hard robots are to do. You need a crack technical team to power the technology, a well-tuned sales motion, and a CFO who is an absolute wizard with cash flow management. Not for the founders who are weak at heart. Investors are likely betting on an expansion of tasks the robot can do, and decreased cost of the robot arms as they scale. Personally, I’m cheering for them! I hate doing the dishes.

TASTEMAKER

While lying on the couch sick this week, rebellion was on my mind. What does effective political action look like? Is it the No Kings march that happened yesterday? Is it the more violent riots in Nepal that were organized over Discord, which led to over 50 people dead and the first female Prime Minister? So, I decided to watch some genre fiction to let the ideas simmer.

“You know how you can tell the difference between a masked cop and a vigilante? No? Me neither.”

HBO’s Watchmen is the rare prestige TV show that actually earns its pretensions—it’s a brilliant remix of the graphic novel that weaponizes superhero mythology to dissect America’s original sin of racial violence, centering on the 1921 Tulsa massacre in ways that make you genuinely uncomfortable. The show’s central conceit of cops wearing masks to protect their identities from retaliation is chef’s kiss prescient given our current moment: it forces us to reckon with the power asymmetry baked into modern policing, where officers operate with anonymity and legal protection while citizens are surveilled and identified at every turn.

“The pace of repression outstrips our ability to understand it.”

I checked out of Star Wars years ago. Disney ruined it and I wasn’t willing to check in. Andor is the exception here. This isn’t just good genre fiction, it belongs among the finest TV shows ever made. Writer Tony Gilroy poured everything he’s ever learned about revolt into Andor. He’s said the show came from a lifetime of “popcorn history”—all the little facts and patterns about how uprisings actually start, spread, and eat their own. Instead of mythic heroes, he wanted to show the forgotten people who make rebellion possible—the fixers, smugglers, and martyrs whose names don’t make it into the scroll. Andor is Gilroy’s master thesis on revolution: messy, bureaucratic, and human. Incredible show.

Have a great week,

Evan

Sponsorships

We are now accepting sponsors for the winter. If you are interested in reaching my audience of 35K+ founders, investors, and senior tech executives, send me an email at team@gettheleverage.com.

"the only path that works is the one that looks like you." So true. I read the book "Why greatness cannot be planned" last year and was struck by the intuitive of-course-ness of the thesis. Greatness can only be stumbled into. Any striving for it takes you away from it.

But to live into one's uniqueness is... kinda painful! So full of uncertainty, of doubt. But to do anything else is to live life as a shadow, an echo of someone else's path. So how to manage? Total doubt, total confidence.

"People ask for the road to Cold Mountain,

but no road reaches Cold Mountain.

Summer sky– still ice won't melt.

The sun comes out but gets obscured by mist.

Imitating me, where does that get you?

My mind isn't like yours.

When your mind is like mine

you can enter here."

– Hanshan, https://en.wikipedia.org/wiki/Hanshan_(poet)

12 month CAC Payback is awesome, top tier, especially for a product with hardware

The top ten median CAC payback for software is 2 years. Median is more than 3 years.